I am a researcher at OpenAI, where I work on the Applications Research Team. I have worked on our early robotics efforts, and more recently on enhancing the performance of our reasoning models outside the canonical domains of math and coding. I was previously on leave from my PhD at the Robotics Institute at Carnegie Mellon University, where I was advised by Zico Kolter and Zac Manchester. I graduated from UC Berkeley, where I worked with Professors Sergey Levine and Claire Tomlin in the Berkeley Artificial Intelligence Research (BAIR) Lab on deep RL and safe learning.

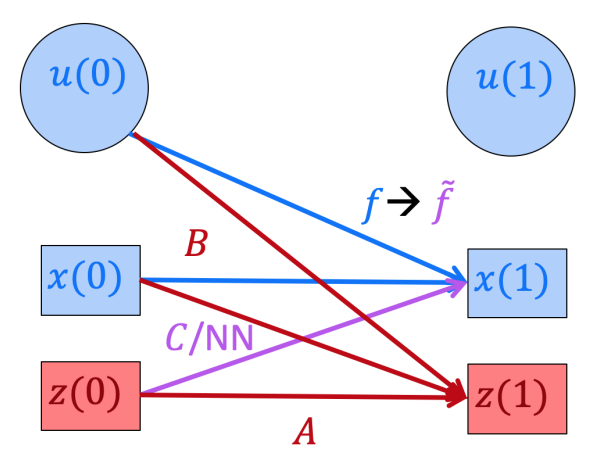

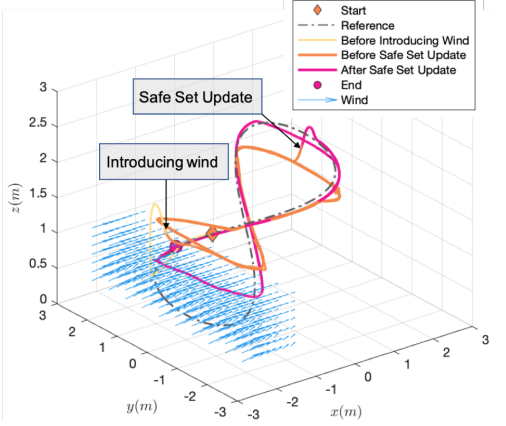

Last year, I worked on Brilliantly, building open-source AI projects and providing custom AI solutions for clients. You can learn about the projects Brilliantly worked on here. My past research aimed to bring reinforcement learning to the real world. Most recently, I worked on gray-box methods for quadrotor control. I have also developed more natural means of task specification for deep RL, which avoid the burden of manually engineered reward functions, as well as data-efficient learning techniques that allow for safety guarantees throughout the learning process.

Suvansh Sanjeev [Blog] [Code]

Suvansh Sanjeev [Blog] [Code]

Suvansh Sanjeev [Blog] [Code]

Suvansh Sanjeev [Blog]

Suvansh Sanjeev [Blog] [Code]

Suvansh Sanjeev [Blog] [Extension Code] [Backend Code]

Suvansh Sanjeev [Blog] [Backend Code] [Frontend Code]

Suvansh Sanjeev. CMU Master's Thesis, 2022. [Thesis]

Sylvia Herbert*, Jason J. Choi*, Suvansh Sanjeev, Marsalis Gibson, Koushil Sreenath, Claire J. Tomlin. Robotics: Science and Systems, 2021. [Paper]

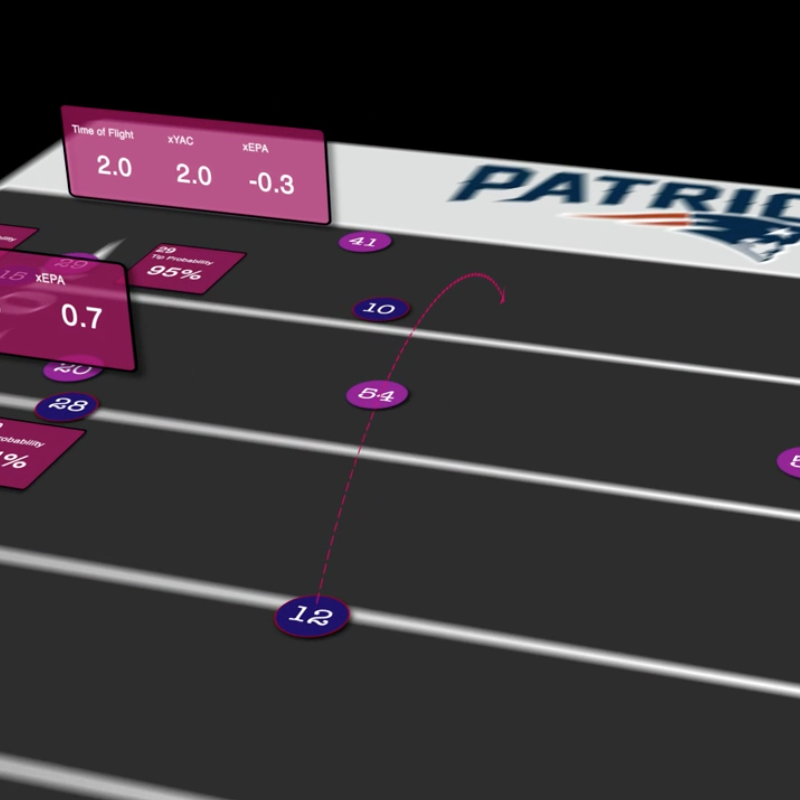

NFL Big Data Bowl 2021 [Code]

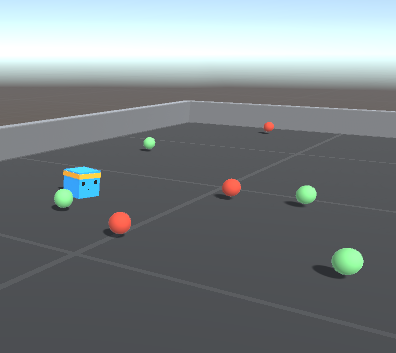

John D. Co-Reyes*, Suvansh Sanjeev*, Glen Berseth, Abhishek Gupta, Sergey Levine. Deep RL Workshop at NeurIPS, 2019. [Paper]

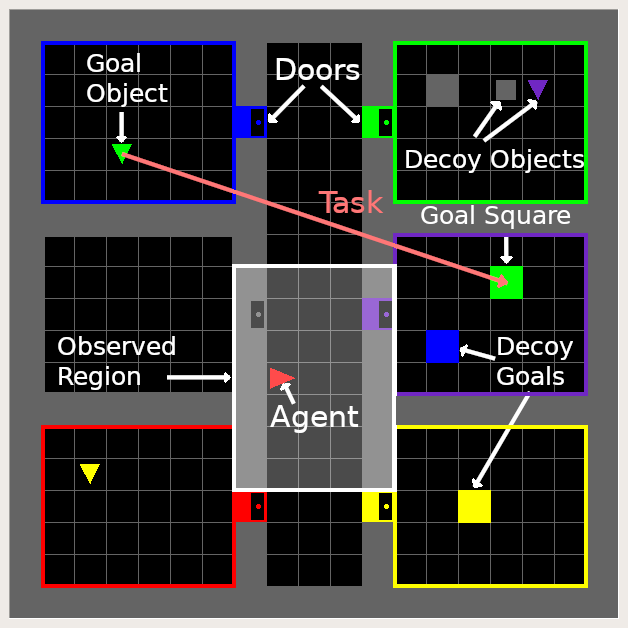

John D. Co-Reyes, Abhishek Gupta, Suvansh Sanjeev, Nick Altieri, Jacob Andreas, John DeNero, Pieter Abbeel, Sergey Levine. International Conference on Learning Representations, 2019. Best Paper at Meta-Learning Workshop at NeurIPS, 2018. [Paper]

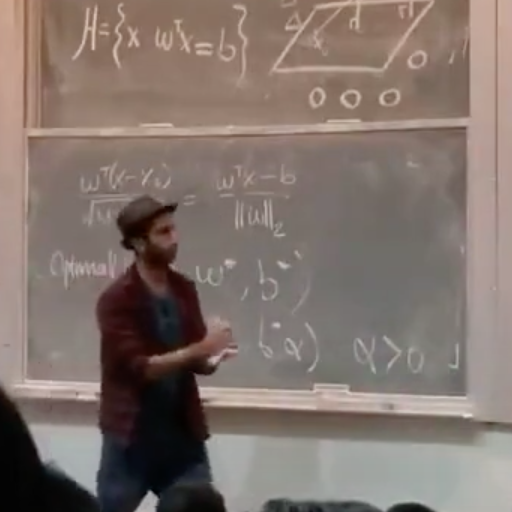

I received the 2020-2021 Outstanding Graduate Student Instructor Award at UC Berkeley, where I was fortunate enough to serve as the head teaching assistant for the incredible Professors Gireeja Ranade, Alexandre Bayen, and Babak Ayazifar.

One of three lectures I delivered during the Fall 2019 offering of EECS 127/227A can be found here.

EECS 127 (Convex Optimization), Spring 2019, Fall 2019 (Head TA)

EE 120 (Signals and Systems), Fall 2018 (Head TA)

CS 61C (Great Ideas in Computer Architecture (Machine Structures)), Summer 2018